This post is part of the series Building your own Software Defined Network with Linux and Open Source Tools and covers the re-designed topology of the distributed infrastructure.

From a bird’s-eye perspective, the Freifunk Hochstift infrastructure mainly consists of three building blocks:

- Distributed servers hosted in data centers in Paderborn and remotely in Germany providing infrastructure services

- Wireless backbones within the city centers of Paderborn, Delbrück, etc.

- Freifunk nodes at homes, shops, enterprises, or elsewhere

This post will focus on the distributed servers as well as the wireless backbones and will only cover the roughly 1,000 client nodes from the perspective of connecting them to the backbone (“gateways”).

With all the things mentioned in Specifics and history of a Freifunk network in mind I got back to the drawing board and thought about a new design.

Design goals

Dual-Stack + OSPF/iBGP everywhere

In contrast to the strong focus on B.A.T.M.A.N. adv. in the previous network design, the focus of the new design was on creating an IP backbone with small broadcast domains and Dual-Stack everywhere. The (quite painful) experience with OSPF in large layer2-based VPNs was a strong motivation for building point-to-point links from now on and for building automation to do so.

Former experiments with OSPF and filtering were as troublesome as one could expect (after having some experience with networking :-)) and came back haunting me now and then. Therefore the new design should use OSPF and iBGP for distribution of routing information, implementing a clear separation between loopback reachability plus in-band management on the one hand and production prefixes on the other.

POPs not connected by wireless links should be connected using point-to-point VPN links which allow the use of dynamic routing protocols on top of it.

Multiple Uplinks

The distributed infrastructure will have separate uplinks to the Freifunk Rheinland backbone and should implement hot-potato routing to use the nearest exit.

Each POP having meaningful uplink capacity will host a border router. All systems local to that POP – probably a virtualization host and the VMs running on it – should use this local uplink. If the local uplink isn’t operable for whatever reason, but the POP is still connected to the Freifunk Hochstift network, a border router at another POP should be used. By means of OSPF costs it should be possible to influence which backup paths will be used.
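The exit selection described above can be illustrated with a minimal sketch. It only models the decision (pick the operable border router with the lowest path cost); the router names and cost values are hypothetical, and in the real network this decision is made by the routing protocols, not by a script.

```python
from typing import Optional


def pick_exit(costs: dict[str, int], operable: set[str]) -> Optional[str]:
    """Return the operable border router with the lowest path cost, or None."""
    candidates = {name: cost for name, cost in costs.items() if name in operable}
    if not candidates:
        return None
    # Ties are broken by name so the result is deterministic.
    return min(candidates, key=lambda name: (candidates[name], name))


# Hypothetical path costs as seen from a system at POP A.
costs = {"br-pop-a": 10, "br-pop-b": 110, "br-pop-c": 210}

# Normal operation: the local border router is the nearest exit.
print(pick_exit(costs, {"br-pop-a", "br-pop-b", "br-pop-c"}))

# Local uplink down: the next-cheapest border router takes over.
print(pick_exit(costs, {"br-pop-b", "br-pop-c"}))
```

Raising or lowering the cost towards a remote POP changes which border router wins once the local one is gone, which is the knob the paragraph above refers to.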

Flexibility and Scalability

The new infrastructure should be as flexible as possible, e.g. it should be possible to run infrastructure services at any location or even at multiple locations at the same time (read: anycasted). The setup should scale up to lots of wireless backbone POPs, a sane number of distributed virtualization hosts and some gigabits of traffic. (With quite a lot of VPN connections running over the public Internet instead of VLLs / waves etc., this isn’t as trivial as it sounds.)

Every B.A.T.M.A.N. site everywhere

The article about Specifics and history of a Freifunk network introduced the concepts of regions and sites. Each site translates into an instance of the B.A.T.M.A.N. adv. mesh protocol. Each B.A.T.M.A.N. site will be operated by two or more gateways for redundancy and performance reasons. Within the new infrastructure there should be up to 6 gateways at different locations running multiple B.A.T.M.A.N. sites. It should be possible to configure any n:m mapping between gateways and B.A.T.M.A.N. sites.

To fully exploit the advantages of the wireless backbone(s) operated in the Paderborn and Warburg area, all backbone routers should be able to carry any number of B.A.T.M.A.N. sites as well, to allow connecting suburban sites to the gateways.
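As a rough illustration of such an n:m mapping, the following sketch stores the mapping per site, derives the per-gateway view, and flags sites that have fewer than two gateways. The site and gateway names are made up for the example and the data layout is an assumption, not the format used in the actual infrastructure.

```python
def sites_by_gateway(gateways_by_site: dict[str, list[str]]) -> dict[str, set[str]]:
    """Invert a site -> gateways mapping into gateway -> sites."""
    inverse: dict[str, set[str]] = {}
    for site, gateways in gateways_by_site.items():
        for gateway in gateways:
            inverse.setdefault(gateway, set()).add(site)
    return inverse


def under_provisioned(gateways_by_site: dict[str, list[str]], minimum: int = 2) -> list[str]:
    """Return the sites served by fewer than `minimum` distinct gateways."""
    return sorted(
        site for site, gateways in gateways_by_site.items()
        if len(set(gateways)) < minimum
    )


# Hypothetical mapping: any site may run on any subset of the gateways.
mapping = {
    "site-a": ["gw01", "gw02", "gw03"],
    "site-b": ["gw02", "gw04"],
    "site-c": ["gw03"],
}

print(sites_by_gateway(mapping))
print(under_provisioned(mapping))
```

Keeping the mapping as plain data like this makes it easy to generate the gateway configuration from it and to check the redundancy goal before anything is rolled out.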

Fully automated / SDN

No configuration file within the whole infrastructure should be edited manually on the target system. Every part of any configuration which can be programmatically generated should be generated.

Topology and design choices

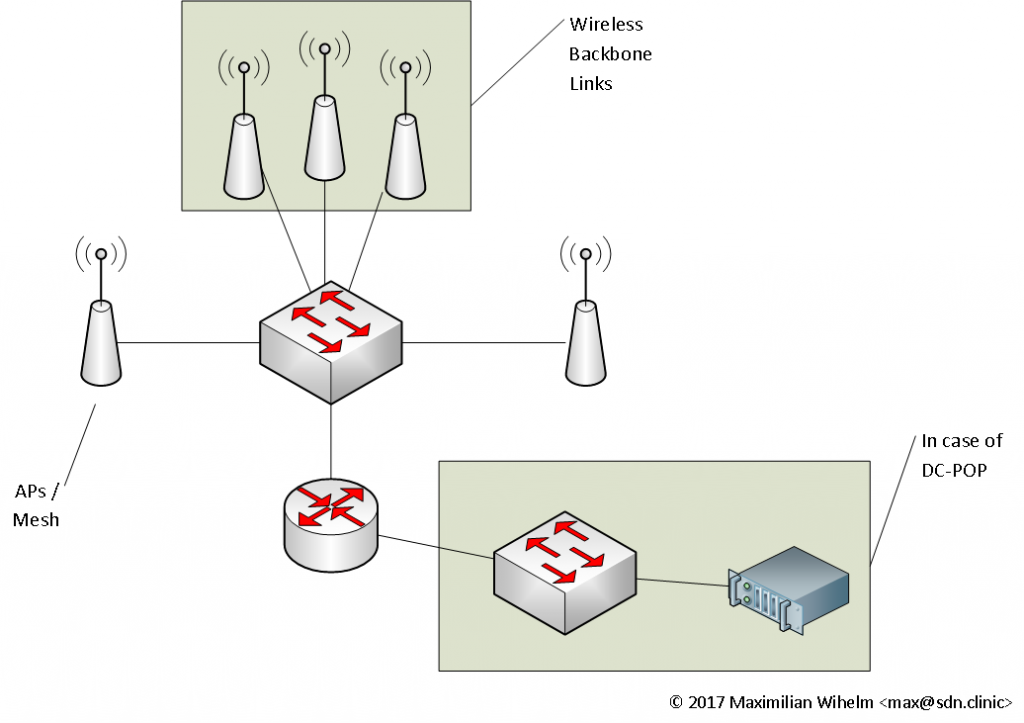

With all these goals in mind we ended up with the following network topology for POPs connected to the wireless backbone:

From top to bottom there are some wireless backbone links providing transparent layer2 bridges to adjacent backbone POPs. The wireless devices are connected to a WISP switch providing Power-over-Ethernet (PoE), VLANs and SNMP capabilities. VLANs are used to separate the connections to adjacent backbone POPs, the management network as well as local B.A.T.M.A.N. adv. and/or client network breakouts.

The backbone router (BBR) terminates all VLANs and will form OSPF adjacencies with all adjacent BBRs. Every pair of adjacent BBRs uses one unique VLAN (VLAN id 20xy) to form a PTP L3 connection (over a logical PTP L2 connection). Each wireless PTP VLAN is configured with a /31 prefix for IPv4 and a /126 prefix for IPv6. All prefixes used for wireless links are assigned from a defined pool on a use-first-free-prefix basis.

Depending on the POP’s location and geography there are some wireless access points deployed outside or within the building to provide access to the Freifunk network. At locations where it seems useful there are entry points to the mesh network as well. The access network is configured with the same VLAN id everywhere (100 + site-id) as is the mesh breakout (200 + site-id). Those VLANs are local to the POP and are not exposed via any wireless backbone links. That way we can safely reuse the same VLAN ID at all POPs.
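The use-first-free-prefix assignment can be sketched with Python’s standard `ipaddress` module. This is a minimal illustration, not the tooling actually used: the function name is made up, and the pools shown are placeholder ranges (a documentation range and a private range), not the real FFHO pools.

```python
import ipaddress


def first_free_prefix(pool: str, new_prefix: int, used: list[str]):
    """Return the first subnet of length `new_prefix` in `pool` that overlaps no used prefix."""
    pool_net = ipaddress.ip_network(pool)
    used_nets = [ipaddress.ip_network(u) for u in used]
    # subnets() is a lazy generator, so only as many candidates as needed are built.
    for candidate in pool_net.subnets(new_prefix=new_prefix):
        if not any(candidate.overlaps(u) for u in used_nets):
            return candidate
    raise ValueError(f"no free /{new_prefix} left in {pool}")


# Placeholder pool for PTP links; two /31s are already taken.
link = first_free_prefix("198.51.100.0/24", 31, ["198.51.100.0/31", "198.51.100.2/31"])
print(link)              # the prefix for the new link
print(link[0], link[1])  # one address for each end of the link

# The same helper works for other pools, e.g. a /24 out of a /16.
print(first_free_prefix("10.0.0.0/16", 24, ["10.0.0.0/24"]))
```

Because the result depends only on the pool and the list of prefixes already in use, the assignment stays reproducible as long as that list is kept in one place.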
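The VLAN numbering above is simple enough to express in a few lines. The sketch below assumes site IDs stay below 100; that limit is an inference from the two offsets (otherwise access VLAN 100 + 100 would collide with mesh VLAN 200 + 0), not something taken from the FFHO documentation, and the function names are made up.

```python
ACCESS_VLAN_BASE = 100  # access network: 100 + site-id
MESH_VLAN_BASE = 200    # mesh breakout:  200 + site-id
MAX_SITE_ID = 99        # assumption: keeps the two ranges from overlapping


def check_site_id(site_id: int) -> int:
    """Reject site IDs that would make the access and mesh ranges collide."""
    if not 0 <= site_id <= MAX_SITE_ID:
        raise ValueError(f"site id {site_id} outside 0..{MAX_SITE_ID}")
    return site_id


def access_vlan(site_id: int) -> int:
    return ACCESS_VLAN_BASE + check_site_id(site_id)


def mesh_vlan(site_id: int) -> int:
    return MESH_VLAN_BASE + check_site_id(site_id)


# The same site gets the same VLAN IDs at every POP.
print(access_vlan(5), mesh_vlan(5))
```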

Currently two POPs in the city of Paderborn are on the roof of a data center where we are allowed to house servers (which we use as virtualization hosts for infrastructure services). This of course is the best-case scenario and all a Freifunker could wish for. The network segments used for servers and VMs are also exposed to the OSPF/iBGP routing domain, so we have full IP reachability between all our systems.

See the VLAN page within the FFHO wiki for a complete nomenclature of all VLANs.

Management networks

With a real IP backbone, the former global management network/VLAN has been torn down in favour of one in-band management network/VLAN per POP. This obviously introduces a dependency (SPOF) on the POP’s router for the management of devices local to that POP. Setting up real out-of-band management would require additional hardware at each POP and realistically is not possible for many reasons – mainly cost – so it wasn’t considered further.

To mitigate this SPOF, the local management VLAN is exposed to each adjacent POP via the WiFi backbone links. This way, in the case of an outage of POP A’s router, two things would have to be done to reconnect the management interfaces of all devices at POP A to the network: configure management VLAN A on the switch at POP B, and configure an SVI for A’s management network on POP B’s router. This safety net has already come in handy on some occasions 🙂

Each management VLAN corresponds to a /24 prefix which is assigned from a defined /16 pool on a use-first-free-prefix basis.

VPNs

The point-to-point VPN links are currently realized with layer2 OpenVPN tunnels. That way OpenVPN only cares about transporting Ethernet frames / IP packets from one side to the other and routing can be controlled by bird. Each tunnel is configured with a /31 prefix for IPv4 and a /126 prefix for IPv6. All prefixes used for VPN links are assigned from a defined pool on a use-first-free-prefix basis.

The assignment of IP addresses on the tunnel interfaces is done by ifupdown(2), which is called by OpenVPN on interface up and down events.

2023 Update: VPN tunnels have been migrated to WireGuard as it’s easier to set up, recovers much faster, and can carry much higher throughput compared to OpenVPN, especially on the APU systems.

IPv6 /126 prefix length

The prefix length of /126 has been chosen to follow the principle of least surprise. As Linux systems (read: iproute2) strip away any trailing zeros in IPv6 addresses when displaying them, an address of 2a03:2260:2342:abcd::0/127 would be shown without the last 0, which might lead to wrong conclusions when debugging. Using prefix::1/126 and prefix::2/126, this potential (cosmetic) issue can be circumvented. As the whole /64 is reserved anyway, that’s not a problem with regard to address space usage. Even if we were to use adjacent /126s from the same /64 block, a 2x increase shouldn’t make much of a difference, especially not at our scale.
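The display issue can be reproduced without a router at hand. Python’s standard `ipaddress` module compresses zeros in a similar way, so the sketch below uses it as a stand-in; it shows Python’s formatting, not literal iproute2 output.

```python
import ipaddress

# The ::0 end of a /127 loses its trailing zero when printed.
zero_end = ipaddress.ip_interface("2a03:2260:2342:abcd::0/127")
print(zero_end)  # 2a03:2260:2342:abcd::/127

# With ::1 and ::2 out of a /126, both ends keep a visible host part.
side_a = ipaddress.ip_interface("2a03:2260:2342:abcd::1/126")
side_b = ipaddress.ip_interface("2a03:2260:2342:abcd::2/126")
print(side_a)  # 2a03:2260:2342:abcd::1/126
print(side_b)  # 2a03:2260:2342:abcd::2/126

# Both ends still belong to the same link prefix.
print(side_a.network == side_b.network)  # True
```

The first line of output looks like a bare network prefix rather than a host address, which is exactly the kind of ambiguity the /126 scheme avoids.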

B.A.T.M.A.N. adv. overlays

The (wireless) backbone must be able to transport multiple B.A.T.M.A.N. adv. instances in parallel. As B.A.T.M.A.N. adv. is a layer 2 protocol there basically are only two options for achieving this:

Creating a separate VLAN for every connection between two B.A.T.M.A.N. adv. routers for every site, or using some overlay mechanism to transport the B.A.T.M.A.N. adv. traffic over the IP network. The first option does not scale at all and would create a VLAN management mess on the routers as well as on the switches, so option two remains. Creating an overlay network is something which is in most network engineers’ genes and therefore comes naturally 🙂

There are some choices for building a PTP layer 2 overlay over an IP network:

- MPLS Layer2 VPN

- OpenVPN with tap interfaces

- GREtap

- VXLAN

- etc.

MPLS for Linux wasn’t a thing back in the day and, to the best of my knowledge, doesn’t provide layer 2 services in current implementations either, so it isn’t an option. OpenVPN would generate a lot of context switches as it’s a user-space application, and crypto would not be needed on the wireless backbone (to be fair, a NULL cipher could be used), so it isn’t ideal either. Many people complained about problems and oopses with GREtap tunnels when I was redesigning the backbone, which discouraged their use.

VXLAN was already a thing and a friend threw RFC 7348 at me, and it turned out it was already implemented in the Linux kernel and in iproute2. After playing around with it, I was set. Adding support for VXLAN to Debian’s ifupdown seemed possible and a quick test was promising when the same friend threw Cumulus Networks’ ifupdown2 into the mix, which is a rewrite of ifupdown in Python with the capability to write add-ons. It came with a VXLAN add-on already present.

So we ended up encapsulating B.A.T.M.A.N. adv. traffic between backbone nodes into VXLAN. One VTEP for each PTP connection for each site. All VNIs and Multicast IPs are calculated from underlying PTP VLANs and the site ID.
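The post does not spell out the actual formula, so the following is only a sketch of what such a deterministic derivation could look like. The bit layout, the 239.0.0.0/8 multicast range and the function names are all assumptions chosen for illustration; the only constraints taken from the standards are that a VNI is 24 bits wide and a VLAN ID fits into 12 bits.

```python
import ipaddress

MULTICAST_BASE = ipaddress.IPv4Address("239.0.0.0")  # assumed range, not FFHO's


def vni_for(site_id: int, ptp_vlan: int) -> int:
    """Hypothetical scheme: site ID in the upper bits, PTP VLAN ID in the lower 12."""
    if not 0 <= site_id <= 255:
        raise ValueError("site id must fit into 8 bits in this sketch")
    if not 1 <= ptp_vlan <= 4094:
        raise ValueError("not a valid VLAN id")
    return (site_id << 12) | ptp_vlan  # at most 20 bits, well within the 24-bit VNI


def multicast_group_for(vni: int) -> ipaddress.IPv4Address:
    """Hypothetical scheme: use the VNI as the low 24 bits of a 239.x.y.z group."""
    return MULTICAST_BASE + vni


# Example: site 3 carried over the PTP link in VLAN 2012.
vni = vni_for(3, 2012)
print(vni, multicast_group_for(vni))
```

Whatever the concrete formula is, the benefit is the same: both ends of a link compute identical values from data they already have, so no VNI or multicast group ever has to be assigned by hand.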

Read on in the next post in this series about Hardware platforms of a Freifunk network.