This post is part of the series Building your own Software Defined Network with Linux and Open Source Tools and covers the specifics of a Freifunk network as well as the history of the Freifunk Hochstift network that led to the latest re-design.

From a bird's-eye perspective, the Freifunk Hochstift infrastructure consists of three main building blocks:

- Distributed servers hosted in data centers in Paderborn and elsewhere in Germany, providing infrastructure services

- Wireless backbones within the city centers of Paderborn, Warburg, etc.

- Freifunk nodes at homes, shops, enterprises, or elsewhere

This post focuses on the distributed servers and the wireless backbones, and covers the roughly 1,000 client nodes only from the perspective of connecting them to the backbone (“gateways”).

Specifics of a Freifunk network

One thing that sets a Freifunk network apart from a typical access or service provider network is the use of mesh protocols like B.A.T.M.A.N. advanced (Better Approach To Mobile Adhoc Networking) [open-mesh Wiki, Wikipedia]. B.A.T.M.A.N. adv. is a layer 2 mesh protocol for mobile ad-hoc networks: it provides an Ethernet overlay network on top of Ethernet underlays and uses broadcast messages to exchange neighbor information.

B.A.T.M.A.N. adv. uses special nodes, called gateways, as central nodes which usually provide access to the Internet and a DHCP service. Within B.A.T.M.A.N. adv., each regular node calculates its best gateway, the one with the highest Transmit Quality (TQ) / smallest hop penalty, and relays all DHCP requests to that gateway, which in turn usually presents itself as the default router to any DHCP clients.
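To make the gateway choice more concrete, here is a minimal Python sketch under a simplified model of B.A.T.M.A.N. IV: TQ is expressed on a 0 to 255 scale, each link along a path scales the TQ by that link's quality, and every hop additionally applies a hop penalty. The real batman-adv implementation also considers asymmetric link penalties, advertised gateway bandwidth and the configured gateway selection class, so treat this as an illustration only. The gateway names, the link values and the hop penalty of 30 are assumptions.

```python
from typing import Dict, List

TQ_MAX = 255  # TQ values range from 0 (unusable) to 255 (perfect)


def path_tq(link_tqs: List[int], hop_penalty: int = 30) -> int:
    """Simplified end-to-end TQ of a path, given the TQ of each link on it.

    Each hop scales the running TQ by the link quality and then applies
    the hop penalty, using integer arithmetic on the 0..255 scale.
    """
    tq = TQ_MAX
    for link_tq in link_tqs:
        tq = tq * link_tq // TQ_MAX
        tq = tq * (TQ_MAX - hop_penalty) // TQ_MAX
    return tq


def best_gateway(paths: Dict[str, List[int]], hop_penalty: int = 30) -> str:
    """Pick the gateway whose path yields the highest TQ.

    `paths` maps a (hypothetical) gateway name to the link TQs on the path
    towards it; DHCP requests would then be relayed to this gateway.
    """
    return max(paths, key=lambda gw: path_tq(paths[gw], hop_penalty))


if __name__ == "__main__":
    paths = {
        "gw1": [255, 200],  # two hops: one perfect and one good link
        "gw2": [180],       # a single, mediocre link
    }
    for gw, links in paths.items():
        print(gw, path_tq(links))  # gw1 155, gw2 158
    print("best:", best_gateway(paths))  # best: gw2
```

In this example the single mediocre link to gw2 (TQ 158) beats the two-hop path to gw1 (TQ 155), because the hop penalty is applied once per hop.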

In a Freifunk network, the B.A.T.M.A.N. gateways are usually provided by the community (Freifunk Hochstift in this case), while the regular nodes are operated by all the people willing to participate in the mesh network. The gateway machines usually also provide an L2-based VPN service (usually realized with fastd or, nowadays, L2TP) which distributed nodes use to connect to the mesh network.

History

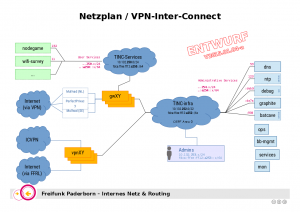

When the infrastructure re-design started back in 2015, we had only a single virtualization host of our own and used quite a few sponsored VMs for infrastructure services. At the time we had built a network heavily relying on L3 VPNs based on tinc, as shown in the network plan above. Each filled rectangle represents a virtual machine.

The infra VPN connected all virtual machines providing infrastructure services with all gateways and core routers. All gateways (and core routers, even though this is not shown in the diagram) were also connected to a second VPN for user services, where anyone could provide a service for the Freifunk network. Operators could connect to the infra VPN and had access to the whole network.

Now one might wonder, how is it possible to use OSPF within an L3-based tinc VPN? It isn't. In the early days the routing was done by tinc alone. As indicated in the network plan, one idea was to use L2-based tinc VPNs and run OSPF for internal routing. This, as I had been warned before but was too young to listen, turned out to be a bad idea and didn't work all that well.

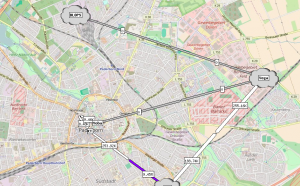

The wireless backbone network within the city of Paderborn was another problem area. In the early days we had a small number of wireless backbone links (WBBL) and wanted to connect all POPs via B.A.T.M.A.N. adv. to extend our mesh network over the wireless backbone. The goal was, and still is, to provide entry points for users at each location in order to minimize the B.A.T.M.A.N. adv. VPN traffic.

To create virtual point-to-point links between the B.A.T.M.A.N. adv. routers at each end of the wireless links, we used VLANs. Each wireless link got its own unique VLAN ID (2300 + link ID) for the B.A.T.M.A.N. traffic. As we only had one big B.A.T.M.A.N. adv. network, this seemed like a straightforward idea at the time. For the management of the wireless devices, switches, etc. we used one global VLAN, which obviously turned out to be a bad idea and led to a second VLAN per wireless link carrying IP traffic, to create one management network per POP. That's when the routing thing started. The topology looked something like the network plan above.
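The per-link VLAN scheme can be sketched in Python as a small generator for iproute2 commands. It assumes Linux routers with the batman-adv mesh interface named bat0 and uses a hypothetical parent interface eth0; only the 2300 + link ID rule comes from our setup. The management VLAN is left out because its numbering isn't described here.

```python
from typing import List

VLAN_BASE = 2300    # B.A.T.M.A.N. VLAN ID = 2300 + link ID
VLAN_ID_MAX = 4094  # highest usable 802.1Q VLAN ID


def batman_vlan_id(link_id: int) -> int:
    """Return the B.A.T.M.A.N. VLAN ID for a wireless link."""
    vid = VLAN_BASE + link_id
    if link_id < 0 or vid > VLAN_ID_MAX:
        raise ValueError(f"link ID {link_id} gives invalid VLAN ID {vid}")
    return vid


def link_commands(parent: str, link_id: int, mesh_if: str = "bat0") -> List[str]:
    """iproute2 commands creating the link's VLAN and attaching it to the mesh."""
    vid = batman_vlan_id(link_id)
    vlan_if = f"{parent}.{vid}"
    return [
        f"ip link add link {parent} name {vlan_if} type vlan id {vid}",
        f"ip link set dev {vlan_if} master {mesh_if}",
        f"ip link set dev {vlan_if} up",
    ]


if __name__ == "__main__":
    # Hypothetical router terminating wireless links 1 and 7 on eth0.
    for link_id in (1, 7):
        print("\n".join(link_commands("eth0", link_id)))
```

The same VLAN IDs also have to be configured on every switch between the two ends of a link, which is where the manual work piles up.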

Site split ahead

As the network kept growing, we felt the urge to split up the B.A.T.M.A.N. adv. network into smaller networks to shrink the broadcast domains and reduce the high amount of control traffic noise (B.A.T.M.A.N. adv. itself, ARP, ND, IGMP, mDNS, etc.). I drew up some ebtables filters to do some uRPF filtering on the nodes to mitigate the effect and prevent misconfigured nodes from spamming the network, but this couldn't solve the underlying issue. Other Freifunk communities had already split up their large networks into smaller ones and reassured us that it was an undertaking worth the effort.
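The idea behind such source filtering can be illustrated with a small Python sketch. It is not the ebtables ruleset we used: it models a simplified, prefix-list variant of uRPF-style source address validation, where a packet entering on a port is only accepted if its source IP address lies in a prefix expected behind that port. The port name and the prefixes (taken from documentation ranges) are hypothetical.

```python
import ipaddress
from typing import Dict, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]

# Hypothetical: source prefixes legitimately expected behind each port.
EXPECTED_SOURCES: Dict[str, List[Network]] = {
    "client0": [
        ipaddress.ip_network("198.51.100.0/24"),
        ipaddress.ip_network("2001:db8:100::/64"),
    ],
}


def accept(port: str, src: str) -> bool:
    """Accept a packet only if its source address is expected on `port`.

    IPv4 addresses never match IPv6 prefixes (and vice versa), and unknown
    ports have no expected prefixes, so their traffic is dropped.
    """
    addr = ipaddress.ip_address(src)
    return any(addr in net for net in EXPECTED_SOURCES.get(port, []))


if __name__ == "__main__":
    for src in ("198.51.100.7", "10.0.0.1", "2001:db8:100::1"):
        print(src, "accept" if accept("client0", src) else "drop")
```

A real filter would also have to let through legitimate traffic with other source addresses, such as DHCP discovery from 0.0.0.0 or IPv6 neighbor discovery from link-local addresses, which this sketch ignores.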

While planning the so-called “site split”, we used a map of our “service region” and carved out 26 non-overlapping regions as the smallest possible mesh networks. We then combined some adjacent regions into a site, which would become one instance of a B.A.T.M.A.N. adv. mesh network with around 100 to 150 nodes. If a site started to outgrow these limits in the future, we would split it up again. This set the upper bound for the number of B.A.T.M.A.N. adv. networks at 26 and required a scalable network concept.
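The rules of the site plan can be expressed as a short Python check. The region names, node counts and site names below are made up for illustration; only the rules come from the plan: regions must not overlap, every region belongs to exactly one site, and a site above roughly 150 nodes is a candidate for another split. Since every site contains at least one region and regions are not shared, there can never be more sites than the 26 regions. Adjacency of the combined regions is not checked.

```python
from typing import Dict, List, Set

MAX_NODES_PER_SITE = 150  # upper end of the 100 to 150 node target


def sites_to_split(region_nodes: Dict[str, int], sites: Dict[str, Set[str]]) -> List[str]:
    """Validate a site plan and return the sites that outgrew the node limit.

    region_nodes maps each region to its node count; sites maps each site to
    the set of regions it combines.
    """
    if any(not regions for regions in sites.values()):
        raise ValueError("a site must contain at least one region")
    assigned = [region for regions in sites.values() for region in regions]
    if len(assigned) != len(set(assigned)):
        raise ValueError("a region is assigned to more than one site")
    if set(assigned) != set(region_nodes):
        raise ValueError("every region must belong to exactly one site")
    # Non-empty, disjoint sites imply len(sites) <= len(region_nodes).
    return sorted(
        site
        for site, regions in sites.items()
        if sum(region_nodes[r] for r in regions) > MAX_NODES_PER_SITE
    )


if __name__ == "__main__":
    regions = {"r1": 60, "r2": 50, "r3": 120, "r4": 40}  # hypothetical counts
    plan = {"site-a": {"r3", "r4"}, "site-b": {"r1", "r2"}}
    print(sites_to_split(regions, plan))  # ['site-a'] (160 nodes)
```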

At the time, two gateways were located in data centers in Paderborn and connected to the WiFi backbone, and we planned for a much bigger WiFi backbone within the city and the suburban areas. With the new idea of sites, the city of Paderborn was one site (“pad-cty”) while the surrounding suburbs were not part of that site, so the new backbone would have to be able to carry multiple B.A.T.M.A.N. adv. networks in parallel to connect the suburbs to the gateways. (The gateways would need to be able to serve multiple B.A.T.M.A.N. adv. sites as well.) Sticking to the idea of using VLANs to connect B.A.T.M.A.N. adv. sites as described above would lead to a lot of VLANs and a lot of manual work on all switches etc. Meh. But there's hope.
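A back-of-the-envelope calculation shows how quickly this grows. Assuming, as a worst case, that every site has to be carried over every wireless link, each link needs one B.A.T.M.A.N. VLAN per site plus its management VLAN, so the number of VLANs is links × (sites + 1). The link count of 40 used below is hypothetical.

```python
def vlans_needed(links: int, sites: int) -> int:
    """Worst-case number of unique VLANs with the per-link VLAN scheme.

    Each link carries one B.A.T.M.A.N. VLAN per site plus one management
    VLAN; with a single site this is the original 2 VLANs per link.
    """
    return links * (sites + 1)


if __name__ == "__main__":
    for sites in (1, 5, 26):
        print(sites, vlans_needed(40, sites))  # 80, 240, 1080
```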

Störerhaftung

Back in the day, every gateway had a direct VPN connection to a commercial VPN provider and tunneled all Internet-facing traffic through it, due to the German Störerhaftung. Störerhaftung basically means that the entity providing the Internet access is held responsible for all civil offenses anyone using this connection might have committed. As the Freifunk communities didn't want to be held responsible for anything anyone could have done over the Freifunk network, nearly everyone used VPN services terminated outside Germany.

As commercial VPN services didn't scale at all, Freifunk Rheinland became an ISP and LIR and has for some years now been providing transit services for all Freifunk communities in Germany willing to connect. Details will be covered in a future post. For the impatient, there is a talk Philip Berndroth and I gave at RIPE74 this year about the history of the Freifunk Rheinland backbone AS201701 (slides, recording).

The Topology of a Freifunk network article is the next post in this series and goes into detail on what we actually designed and why.