With WireGuard having found its way into the Linux kernel and all major distributions, we’ve got a pretty powerful new card in our deck that we can play whenever we need a VPN solution. The project website emphasizes performance, simplicity, and avoiding headaches, and reality mostly seems to agree.

As long as WireGuard required building kernel modules, e.g. via DKMS, I wasn’t very interested (I’m not a fan of having compilers on my servers). Once that need went away, I got curious.

Let’s migrate from OpenVPN

I’ve been a big fan of OpenVPN for years and always found ways to make it do everything I wanted, even the funny things. Noteworthy endeavors include Layer 2 bonding over multiple OpenVPN instances to overcome the single-core limit (around 2013) and making it work with VRFs.

Since the redesign of the Freifunk Hochstift network, we’ve used OpenVPN for all inter-site VPNs. With most routers being APU2 units with four CPU cores running at 1 GHz, this roughly translated to 35 Mb/s of throughput per OpenVPN tunnel. OpenVPN’s data channel offload (DCO) looked promising back then; however, it would also have required compilers on most routers or central kernel/module management, which made it a non-starter for a larger fleet.

When moving towards more routed setups and connecting sites with higher bandwidths, though, we needed a solution. Ideally, the new setup would get by without running multiple tunnels with bonding or ECMP on top, and that’s where WireGuard came in. As our infrastructure uses VRFs to separate the internal network from the Internet, WireGuard had to send and receive its encapsulated tunnel traffic via a VRF, which needed some investigation.

Recap: why VRFs?

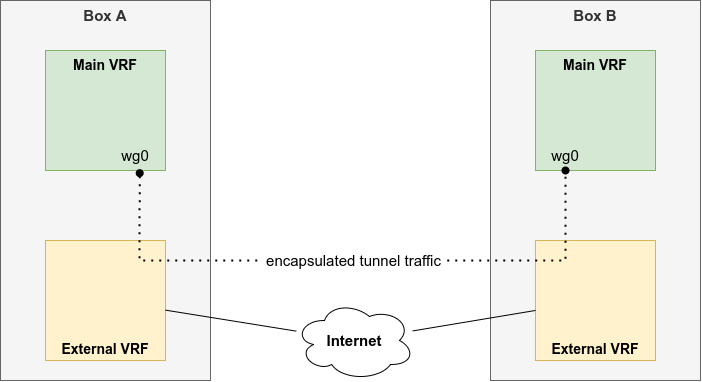

VRFs allow isolating different interfaces at layer 3. In the Freifunk Hochstift network, we chose to treat the main or default VRF as the internal network and to move all Internet-facing interfaces into an external VRF. Following this concept allows us to safely contain traffic within the internal network and to leak eligible traffic into the Internet only at designated border routers.
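For anyone who hasn’t built a VRF by hand yet: the isolation described above boils down to creating a VRF device bound to a routing table and enslaving the Internet-facing interfaces to it. Here’s a minimal sketch with plain iproute2 commands, assuming the table ID 1023 used for vrf_external later in this post and a hypothetical uplink named eth0. The run helper and the DRY_RUN switch are additions for this sketch; by default it only prints the commands.

```shell
#!/bin/sh
# Sketch: create an external VRF and move an uplink into it.
# Assumptions: VRF name/table as used in this post, hypothetical uplink "eth0".
set -eu

# Print commands by default; set DRY_RUN=0 to actually execute them (needs root).
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    else
        echo "$*"
    fi
}

setup_external_vrf() {
    vrf="$1"      # VRF device name, e.g. vrf_external
    table="$2"    # routing table bound to the VRF, e.g. 1023
    uplink="$3"   # Internet-facing interface (hypothetical name)

    # The VRF device is an L3 master device tied to routing table $table.
    run ip link add dev "$vrf" type vrf table "$table"
    run ip link set dev "$vrf" up
    # Enslaving the uplink moves its connected routes into table $table.
    run ip link set dev "$uplink" master "$vrf"
}

setup_external_vrf vrf_external 1023 eth0
```

In this post’s setup, ifupdown-ng creates the VRF from the vrf-table option shown further below, so commands like these are mostly useful for experiments or debugging.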

In a distributed environment like the Freifunk Hochstift network, connecting different islands via VPN tunnels over the Internet is inevitable. This could be done using GRE tunnels or encrypted VPNs like OpenVPN, IPsec, or WireGuard.

Using an external VRF for any Internet-facing interface presents a challenge: The wg device should be part of the default VRF, so internal routing protocols can connect all parts of the network. However, WireGuard should use the external interface/VRF for the encrypted VPN traffic to other islands.

Trying to add VRF support

Looking through the documentation revealed support for setting firewall marks, which can be evaluated by the Linux policy routing framework, and some examples for working with network namespaces, but no support for VRFs. Checking the mailing list confirmed this. I was surprised and parked the topic for a while; however, it’s Open Source, so there’s always the option of patching it, right?

A while later, I let myself be dared into implementing VRF support for WireGuard and made patches for the kernel module (mailing list thread) as well as for wireguard-tools. The patches added a bind-dev option, like OpenVPN’s, so WireGuard would make the setsockopt(SO_BINDTODEVICE) call to tie things together. Sadly, both patches have been sitting around without any reply since November 2021.

While writing this post, I noticed that Margau did the same some months before me and also did not get any reply. They also wrote a nice blog article about WireGuard and VRFs that dives into configuring things with systemd-networkd. If you’re using networkd, you might want to have a look over there, as I’m going for Debian-style ifupdown configuration.

Again, I wasn’t too enthusiastic and let things rest a bit.

FwMark workaround

Still in need of a solution, and wanting to move forward with the migration, I went with the fwmark option. As we already had to use firewall marks and policy routing for the fastd tunneling daemon, all the plumbing was in place.

At the end of the day, this boils down to instructing WireGuard to set a fwmark value on all outgoing encapsulated packets. The Linux policy routing framework can then direct the route lookups for these packets to the VRF’s routing table, achieving a result similar to an actual VRF binding.
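Once the policy rule from the next section is in place, the effect of the mark can be observed with ip route get, which accepts a mark argument and reports the route the kernel would choose for such a packet. A small sketch comparing lookups with and without the mark; 198.51.100.7 is a documentation address standing in for a remote peer, and the run helper (a convenience for this sketch) only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Compare the route the kernel picks for a destination with and without
# the fwmark WireGuard puts on its encapsulated packets.
# Assumption: 198.51.100.7 stands in for a remote peer's public address.
set -eu

# Print commands by default; set DRY_RUN=0 to actually run them.
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

compare_lookup() {
    dst="$1"; mark="$2"
    # Unmarked: resolved via the rules for the default VRF (main table).
    run ip route get "$dst"
    # Marked: the fwmark rule should send this lookup to vrf_external.
    run ip route get "$dst" mark "$mark"
}

compare_lookup 198.51.100.7 0x1023
```

On a router with DRY_RUN=0, the marked lookup should resolve via the external uplink, while the unmarked one follows the internal routing.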

PBR

To make this work, you need an ip rule like the first rule below, which instructs the kernel to use table vrf_external for lookups of all packets that carry the fwmark value 0x1023.

# ip rule
999: from all fwmark 0x1023 lookup vrf_external
1000: from all lookup [l3mdev-table]
32766: from all lookup main
32767: from all lookup default
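To reproduce the rule list above by hand, the rule for marked packets can be added with an explicit priority so it is evaluated before the l3mdev rule at 1000. A sketch assuming priority 999 as in the listing; it uses the numeric table ID so it works without a name mapping. It also shows that the mark is hexadecimal: 0x1023 is 4131 in decimal and only looks like the table ID 1023. The run helper and DRY_RUN switch are conveniences for this sketch; by default the commands are only printed.

```shell
#!/bin/sh
# Add the fwmark rule for both address families with the priority seen above.
set -eu

# Print commands by default; set DRY_RUN=0 to actually run them (needs root).
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

MARK=0x1023   # hexadecimal: 4131 in decimal, not the same number as the table ID
TABLE=1023    # decimal ID of the vrf_external routing table
PREF=999      # evaluated before the l3mdev rule at priority 1000

add_mark_rules() {
    # IPv4 and IPv6 keep separate rule lists, so add the rule to both.
    for family in -4 -6; do
        run ip "$family" rule add fwmark "$MARK" table "$TABLE" pref "$PREF"
    done
}

add_mark_rules
```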

Side note: usually you’ll use and see a numeric routing table ID after the lookup keyword; however, you can configure a mapping to names in /etc/iproute2/rt_tables or in a .conf file in the /etc/iproute2/rt_tables.d/ directory:

$ cat /etc/iproute2/rt_tables.d/ffho.conf
# FFHO routing tables (Salt managed)
1023 vrf_external
1042 vrf_mgmt
1100 vrf_oobm
1101 vrf_oobm_ext
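Scripts can resolve these names, too. A minimal sketch that parses files in the format shown above (ID, whitespace, name; lines starting with # are comments); table_id is a hypothetical helper, not part of iproute2, and it only searches the files you pass to it.

```shell
#!/bin/sh
# Resolve a routing table name to its numeric ID from rt_tables-style files.
# table_id is a hypothetical helper for illustration, not an iproute2 tool.
set -eu

table_id() {
    name="$1"; shift
    # Skip comments and short lines; print the ID of the first exact name match.
    awk -v n="$name" '$1 !~ /^#/ && NF >= 2 && $2 == n { print $1; exit }' "$@"
}
```

For example, `table_id vrf_external /etc/iproute2/rt_tables.d/ffho.conf` would print 1023 for the file above, and prints nothing for unknown names.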

To make sure the policy routing rule is configured after a reboot, it is added as an up command (post-up in ifupdown terminology) to the ifupdown-style interface configuration. Note that you need ifupdown2 or ifupdown-ng to make easy use of VRFs; I’m using the latter. Also note that you have to add the rule for both address families, IPv4 and IPv6! The following stanza configures the external VRF:

auto vrf_external
iface vrf_external
    vrf-table 1023
    up ip rule add fwmark 0x1023 table 1023
    up ip -6 rule add fwmark 0x1023 table 1023
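As a missing IPv6 rule only surfaces once tunnels to IPv6 peers fail, a quick check after bringing up vrf_external can save some head-scratching. A minimal sketch: has_mark_rule and check_mark_rules are hypothetical helpers, and the check relies on ip rule printing marks in hexadecimal, as in the listing above.

```shell
#!/bin/sh
# Check that a fwmark rule exists for both IPv4 and IPv6.
set -eu

# Succeeds if the `ip rule` listing on stdin contains a rule for the given mark.
has_mark_rule() {
    grep -q "fwmark $1 "
}

# Runs the check against the live rule lists (on the router).
check_mark_rules() {
    mark="$1"
    rc=0
    for family in -4 -6; do
        if ip "$family" rule show | has_mark_rule "$mark"; then
            echo "ok: ip $family rule for fwmark $mark"
        else
            echo "MISSING: ip $family rule for fwmark $mark" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Usage on the router: check_mark_rules 0x1023
```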

To make sure WireGuard’s UDP sockets, which are not bound to any VRF, accept packets arriving via the external VRF, enable the udp_l3mdev_accept sysctl setting, e.g., in /etc/sysctl.conf or a file of your choice in /etc/sysctl.d/:

net.ipv4.udp_l3mdev_accept = 1
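To apply the setting immediately and keep it across reboots, something along these lines should do. The file name 90-vrf-udp.conf is only an example, and the target directory is a parameter so the sketch can be tried without touching /etc.

```shell
#!/bin/sh
# Persist net.ipv4.udp_l3mdev_accept in a sysctl drop-in file.
set -eu

write_l3mdev_sysctl() {
    dir="${1:-/etc/sysctl.d}"        # sysctl drop-in directory
    file="$dir/90-vrf-udp.conf"      # example file name
    printf '%s\n' 'net.ipv4.udp_l3mdev_accept = 1' > "$file"
    echo "$file"                     # report where the setting was written
}

# On the router (as root):
#   sysctl -p "$(write_l3mdev_sysctl)"   # write the file and load it right away
#   sysctl net.ipv4.udp_l3mdev_accept    # should report a value of 1
```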

WireGuard config

With the policy routing rules in place, we can set up WireGuard tunnels that use this plumbing. This is pretty straightforward: set the FwMark attribute in all /etc/wireguard/*.conf files. A WireGuard server’s configuration could look like this:

[Interface]
PrivateKey = VeryVerySecretGibberish
ListenPort = 51001
FwMark = 0x1023

[Peer]
PublicKey = NotSoSecretGibberish
AllowedIPs = 0.0.0.0/0, ::/0

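Note that we’re setting AllowedIPs to the IPv4 and IPv6 zero prefixes, so WireGuard won’t do any filtering. We’re using dynamic routing protocols and want all traffic to be able to flow through all tunnels. This works since each tunnel interface has a single peer, as WireGuard also uses AllowedIPs to decide which peer an outgoing packet goes to.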
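As every tunnel config needs the mark, a forgotten FwMark line means that tunnel’s encapsulated packets are routed via the main table instead of vrf_external. A small sketch to catch that; missing_fwmark is a hypothetical helper that prints every config file lacking the expected value. It matches the literal string only, so a mark written in decimal (4131) would be reported as missing.

```shell
#!/bin/sh
# List WireGuard config files that lack the expected FwMark setting.
set -eu

missing_fwmark() {
    dir="$1"; mark="$2"
    # Key and hex digits matched case-insensitively; whitespace around '=' allowed.
    pattern="^[[:space:]]*FwMark[[:space:]]*=[[:space:]]*${mark}[[:space:]]*\$"
    for conf in "$dir"/*.conf; do
        [ -e "$conf" ] || continue   # no matching files: the glob stays literal
        if ! grep -Eiq "$pattern" "$conf"; then
            echo "$conf"
        fi
    done
}

# Usage: missing_fwmark /etc/wireguard 0x1023   (prints nothing if all is fine)
```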

Network interface config

With ifupdown-ng, setting up the tunnels is quite straightforward, too. The following interface stanza starts the WireGuard tunnel with its configuration stored in /etc/wireguard/wg-cr02.conf and configures the specified IPs:

auto wg-cr02
iface wg-cr02
    use wireguard
    up echo 0 > /proc/sys/net/ipv6/conf/$IFACE/addr_gen_mode
    #
    address 192.0.2.42/31
    address 2001:db8:fd26::1/126

WireGuard sets the addr_gen_mode sysctl of its tunnel interfaces to disable the generation of an IPv6 link-local address. We set it back to the default value of 0, so Linux generates a link-local address for the interface, which we need to run OSPF for IPv6 (OSPFv3) over the tunnel.
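For debugging, it can help to know roughly what the stanza amounts to. The sketch below performs approximately equivalent steps with iproute2, wg(8), and sysctl; it is an approximation, not ifupdown-ng’s actual implementation. The run helper, a convenience for this sketch, prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Roughly what the wg-cr02 stanza sets up, done by hand (approximation).
set -eu

# Print commands by default; set DRY_RUN=0 to actually run them (needs root).
run() {
    if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

setup_wg_tunnel() {
    ifc="$1"; v4="$2"; v6="$3"
    run ip link add dev "$ifc" type wireguard
    # Loads keys, ListenPort, FwMark and peers from the wg(8) config file.
    run wg setconf "$ifc" "/etc/wireguard/$ifc.conf"
    # Mode 0 is the kernel default; re-enables link-local address generation.
    run sysctl -w "net.ipv6.conf.$ifc.addr_gen_mode=0"
    run ip address add "$v4" dev "$ifc"
    run ip address add "$v6" dev "$ifc"
    run ip link set dev "$ifc" up
}

setup_wg_tunnel wg-cr02 192.0.2.42/31 2001:db8:fd26::1/126
```

Afterwards, `ip -6 address show dev wg-cr02 scope link` should list an fe80:: address.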

Summary

Using firewall marks and the Linux policy-based routing framework, you can get a VRF-like setup that works with different routing domains. Having real VRF support would be nicer, but this works for the time being. Ideally, WireGuard will one day allow binding its sockets to an interface/VRF; the patches are out there.

This setup has been in place since May 2022 and has been moving bits around pretty well. Today, the limit on the APU boards with four 1 GHz cores is about 250-300 Mb/s.

The tunnels are fully managed in NetBox, created semi-automatically via NetBox scripts, and configured entirely via SaltStack. I’ll dive into the tunnel management in a future article.