A while ago we started getting alerts that one of our Kerberos KDCs had problems with Kerberos database replication. A little digging revealed that the problems were caused by load spikes on the KDC, which in turn were the result of bursts of legitimate queries fired by some systems we didn’t have much control over. Additionally, we found that the MIT Kerberos implementation queries the KDCs listed in the configuration file in sequential order, so the first KDC gets nearly all queries. While thinking about load-balancing solutions, anycast quickly came to mind, so we decided to set it up. Anycast leverages the Equal Cost Multipath Routing (ECMP) capability of common routers to distribute traffic to multiple next hops for the same destination.
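To illustrate the difference, here is a small simulation. It assumes every client walks the KDC list in order and stops at the first reachable KDC, and it models ECMP as a per-flow hash over a hypothetical set of three next hops. Real routers use their own hash functions and inputs, so SHA-256 is only a stand-in here, and `sequential_choice` and `ecmp_choice` are made-up names for this sketch.

```python
import hashlib
from collections import Counter

# Hypothetical KDC list in configuration order; names are illustrative only.
KDCS = ["kdc1", "kdc2", "kdc3"]


def sequential_choice(kdcs, alive):
    """Return the first reachable KDC in configuration order."""
    for kdc in kdcs:
        if alive[kdc]:
            return kdc
    raise RuntimeError("no KDC reachable")


def ecmp_choice(next_hops, flow):
    """Map a flow's 5-tuple onto one of several equal-cost next hops.

    Routers use their own (vendor-specific) hash functions; SHA-256 is only
    a stand-in that keeps every packet of a flow on the same next hop.
    """
    key = "|".join(str(part) for part in flow).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return next_hops[bucket % len(next_hops)]


# Synthetic Kerberos requests: (src_ip, src_port, dst_ip, dst_port, proto)
flows = [(f"10.1.{i // 250}.{i % 250}", 40000 + i, "192.0.2.88", 88, "udp")
         for i in range(3000)]
alive = {kdc: True for kdc in KDCS}

sequential = Counter(sequential_choice(KDCS, alive) for _ in flows)
ecmp = Counter(ecmp_choice(KDCS, flow) for flow in flows)
print("sequential:", dict(sequential))
print("ECMP:      ", dict(ecmp))
```

Hashing the whole flow rather than picking a next hop per packet keeps all packets of one Kerberos exchange on the same KDC, while different clients still end up spread across all of them.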

The solution consists of three cornerstones:

- anycast-healthchecker as a means to check service availability

- bird as a BGP speaker on the KDCs and route reflectors

- Data center routers (Cisco Nexus 7010) speaking BGP to the route reflectors

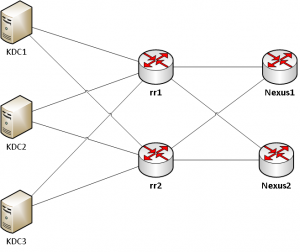

The topology is as follows:

Anycast-Healthchecker

The anycast-healthchecker is a Python-based tool that checks the availability of any number of locally configured services. For each service it checks whether the service IP is configured on the desired interface and whether the service itself is OK. A service is defined in an ini-style configuration file and is considered OK if its check command returns a zero exit code, so your standard Icinga2 checks are a good starting point.
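Since any command following the Nagios/Icinga plugin convention (exit code 0 means OK) can serve as a check, the core loop is simple. The following is a minimal sketch, not anycast-healthchecker’s actual code: `run_check` and `ServiceState` are hypothetical names, and the rise/fail handling reflects our reading of the `check_rise`/`check_fail` options used in the defaults below, namely that a service only changes state after that many consecutive results.

```python
import subprocess
import sys


def run_check(cmd, timeout):
    """Run a check command; True only if it exits with status 0 in time."""
    try:
        result = subprocess.run(cmd, stdout=subprocess.DEVNULL,
                                stderr=subprocess.DEVNULL, timeout=timeout)
    except subprocess.TimeoutExpired:
        return False  # a hanging check counts as a failure
    return result.returncode == 0


class ServiceState:
    """Flip the state only after `rise` successes or `fail` failures in a row."""

    def __init__(self, rise, fail):
        self.rise = rise
        self.fail = fail
        self.healthy = False  # assumption: start unhealthy, i.e. not advertised
        self._streak = 0      # consecutive results that disagree with self.healthy

    def update(self, ok):
        if ok == self.healthy:
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= (self.rise if ok else self.fail):
                self.healthy = ok
                self._streak = 0
        return self.healthy


# Usage: Python one-liners stand in for real plugins (0 = OK, 2 = CRITICAL).
OK = [sys.executable, "-c", "raise SystemExit(0)"]
CRITICAL = [sys.executable, "-c", "raise SystemExit(2)"]

state = ServiceState(rise=2, fail=2)
history = [state.update(run_check(cmd, timeout=10))
           for cmd in (OK, OK, CRITICAL, OK, CRITICAL, CRITICAL)]
print(history)
```

With rise and fail both set to 2, a single failed check does not withdraw the prefix; it takes two failures in a row, which keeps a flapping check from causing route churn.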

The list of all service IPs considered OK is written to a configuration file in bird’s prefix list syntax (one file for IPv4 and, if configured accordingly, one for IPv6), and bird is reconfigured if necessary.

We use the following check defaults within the main configuration file…

    # Default configuration values for checks
    [DEFAULT]
    interface = anycast_srv
    check_interval = 3
    check_timeout = 2
    check_rise = 2
    check_fail = 2
    check_disabled = false
    on_disabled = withdraw
    ip_check_disabled = false

…so the actual check for the Kerberos service is rather compact:

    [KDC]
    check_cmd = /usr/lib/nagios/plugins/check_krb5 -r EXAMPLE.COM -P 88 -H 127.0.0.1 -p nagioskrb5test -k /etc/nagios-plugins/nagioskrb5test.keytab >/dev/null
    ip_prefix = 192.0.2.88
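Because the `[DEFAULT]` section is plain ini syntax, Python’s standard `configparser` module shows nicely how the compact `[KDC]` section inherits all check defaults. This assumes both snippets live in the same file and are read with default `configparser` semantics; whether anycast-healthchecker parses its files exactly this way is an assumption on our part.

```python
import configparser

# The two snippets above, combined into one hypothetical configuration file.
CONFIG = """
[DEFAULT]
interface = anycast_srv
check_interval = 3
check_timeout = 2
check_rise = 2
check_fail = 2
check_disabled = false
on_disabled = withdraw
ip_check_disabled = false

[KDC]
check_cmd = /usr/lib/nagios/plugins/check_krb5 -r EXAMPLE.COM -P 88 -H 127.0.0.1 -p nagioskrb5test -k /etc/nagios-plugins/nagioskrb5test.keytab >/dev/null
ip_prefix = 192.0.2.88
"""

parser = configparser.ConfigParser()
parser.read_string(CONFIG)

kdc = parser["KDC"]  # values missing here fall back to [DEFAULT]
print(kdc["ip_prefix"], kdc["interface"], kdc.getint("check_rise"),
      kdc.getboolean("check_disabled"))
```

Any setting a service section defines itself (for example a shorter `check_interval`) would override the default for that service only.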

After restarting the anycast-healthchecker service, it should start writing the list of healthy service IPs to the file configured as bird_conf, as a constant named after the bird_variable setting (both are set in its main configuration). If the KDC check succeeds, this file could look something like this:

    kdc1:~# cat /var/lib/anycast-healthchecker/anycast-prefixes-v4.conf
    # Generated 2018-02-13 16:37:55.866544 by anycast-healthchecker (pid=10996)
    # 192.0.2.0/32 is a dummy IP Prefix. It should NOT be used and REMOVED from the constant.
    define ANYCAST_ADVERTISE = [ 192.0.2.0/32, 192.0.2.88/32 ];
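The generated file is simple enough to reproduce in a few lines. The sketch below mimics the layout shown above for IPv4 only; `render_prefix_file` is a hypothetical helper, not part of anycast-healthchecker. We presume the dummy 192.0.2.0/32 entry is there to keep the constant valid even when no service is healthy, but that is our interpretation.

```python
import ipaddress
from datetime import datetime

# Placeholder entry copied from the generated file above.
DUMMY_PREFIX = "192.0.2.0/32"


def render_prefix_file(variable, healthy_ips, pid):
    """Render a bird constant listing the healthy service IPs as /32 prefixes."""
    prefixes = [DUMMY_PREFIX]
    for ip in sorted(healthy_ips, key=ipaddress.IPv4Address):
        prefixes.append(str(ipaddress.IPv4Network(f"{ip}/32")))
    return "\n".join([
        f"# Generated {datetime.now()} by anycast-healthchecker (pid={pid})",
        f"# {DUMMY_PREFIX} is a dummy IP Prefix. It should NOT be used "
        "and REMOVED from the constant.",
        f"define {variable} = [ {', '.join(prefixes)} ];",
        "",
    ])


print(render_prefix_file("ANYCAST_ADVERTISE", ["192.0.2.88"], pid=10996))
```

A real writer would presumably also compare the new content with the existing file (ignoring the timestamp line) and only run `birdc configure` when the prefix list actually changed.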

Sadly, anycast-healthchecker (and the required python-json-logger library) aren’t part of Debian (yet?), but Debian packages are available from the following repository:

    deb http://apt.ffho.net stretch main contrib non-free

Bird

The Bird Internet Routing Daemon is a popular implementation of OSPF, BGP, and other common routing protocols. On the KDC service machines we use bird 1.6 to read the prefixes from the service interface (via the direct protocol) and to export them to the rest of the network via BGP sessions.

    # Read prefix list from file which anycast-healthchecker generated
    include "/var/lib/anycast-healthchecker/anycast-prefixes-v4.conf";

    protocol direct anycast_srv {
        interface "anycast_srv";
        import where net ~ ANYCAST_ADVERTISE;
        export none;
    }

If you would like to add a special BGP community to your anycast routes, this can easily be done with the following import filter instead of the simple one above:

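    # At the top level of bird.conf
    define ANYCAST_PREFIX = (65049, 999);

    # Inside "protocol direct anycast_srv", replacing the simple import line
    import filter {
        if net ~ ANYCAST_ADVERTISE then {
            bgp_community.add(ANYCAST_PREFIX);
            accept;
        }
        reject;
    };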

After reconfiguring bird with birdc configure we should already see our service prefix in the bird routing table on the KDC:

    kdc1:~# birdc
    BIRD 1.6.3 ready.
    bird> show route
    192.0.2.88/32      dev anycast_srv [anycast_srv 2018-02-13] * (240)

Now we have to set up BGP sessions to export this route, making the service IP known and reachable within our network. Usually these BGP sessions would be set up directly to the connected routers, which in our case are Cisco Nexus 7010s. For the sake of automation I added two bird-based route reflectors (RFC) to the mix. They are configured by our central bcfg2-based configuration management and hold sessions to all service nodes as well as to the Nexus 7000 routers, leading to this logical topology:

So we end up configuring BGP sessions at three points within the network: the service nodes, the route reflectors, and the Nexus routers.

BGP on the service nodes

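On the service nodes we need iBGP sessions to the route reflectors, which we configure with the following snippets in bird.conf. To simplify the configuration we use bird’s protocol templates, so the parameters common to all iBGP sessions are configured only once. Note that the export filters match on the ANYCAST_PREFIX community, so they rely on the community-tagging import filter shown above; with the simple import filter, no route would be exported.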

    # Our AS number
    define AS_OWN = 65049;

    # Community used to tag anycast routes (define it only once per bird.conf)
    define ANYCAST_PREFIX = (65049, 999);

    # In contrast to usual iBGP we don't import and export anything here by default!
    template bgp ibgp {
        import none;
        export none;
        local as AS_OWN;
        enable route refresh on;
        graceful restart yes;
    }

    # Sessions to route reflectors
    protocol bgp rr1 from ibgp {
        export where ANYCAST_PREFIX ~ bgp_community;
        neighbor 172.16.0.11 as AS_OWN;
    }

    protocol bgp rr2 from ibgp {
        export where ANYCAST_PREFIX ~ bgp_community;
        neighbor 172.16.0.12 as AS_OWN;
    }
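The interplay between the import filter of the direct protocol and the export filters towards the route reflectors is easy to get wrong: the export filter only matches routes carrying the ANYCAST_PREFIX community. This small Python model (hypothetical names, not bird code) shows that only the community-tagging variant of the import filter results in an exported route.

```python
import ipaddress
from dataclasses import dataclass, field

ANYCAST_PREFIX = (65049, 999)  # community defined in bird.conf above
# Contents of the generated ANYCAST_ADVERTISE constant shown earlier
ANYCAST_ADVERTISE = {ipaddress.ip_network("192.0.2.0/32"),
                     ipaddress.ip_network("192.0.2.88/32")}


@dataclass
class Route:
    net: ipaddress.IPv4Network
    communities: set = field(default_factory=set)


def direct_import(route, tag_community):
    """Model the direct protocol's import filter; None means 'reject'."""
    if route.net not in ANYCAST_ADVERTISE:
        return None
    if tag_community:  # the filter variant calling bgp_community.add(...)
        route.communities.add(ANYCAST_PREFIX)
    return route


def exported_to_rr(route):
    """Model 'export where ANYCAST_PREFIX ~ bgp_community'."""
    return ANYCAST_PREFIX in route.communities


kdc_ip = ipaddress.ip_network("192.0.2.88/32")
tagged = direct_import(Route(kdc_ip), tag_community=True)
plain = direct_import(Route(kdc_ip), tag_community=False)
print(exported_to_rr(tagged), exported_to_rr(plain))
```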

BGP on the route reflectors

On the route reflectors we obviously need sessions to the service nodes. Again we create a template containing the settings common to all iBGP route reflector client sessions:

    template bgp rr_client from ibgp {
        import none;
        export none;
        local as AS_OWN;
        enable route refresh on;
        graceful restart yes;
        rr client;
    }

The configuration of the actual sessions to the service nodes is quite straightforward now. We just have to specify each node’s IP and which anycast IP(s) to accept from it. The latter is added as a protection layer, so that a misconfigured or even compromised node speaking BGP can’t influence any other services.

    # Sessions to service nodes
    protocol bgp kdc1 from rr_client {
        import where net ~ [
            192.0.2.88/32 # kerberos-kdc
        ];
        neighbor 10.0.0.101 as AS_OWN;
    }

    protocol bgp kdc2 from rr_client {
        import where net ~ [
            192.0.2.88/32 # kerberos-kdc
        ];
        neighbor 10.0.0.102 as AS_OWN;
    }

    protocol bgp kdc3 from rr_client {
        import where net ~ [
            192.0.2.88/32 # kerberos-kdc
        ];
        neighbor 10.0.0.103 as AS_OWN;
    }
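The per-client import filters act as a whitelist keyed by neighbor. A small model of that check, with hypothetical names, shows which announcements survive: a node may only announce the service IPs listed for it, so a compromised KDC announcing another service’s IP or a default route is dropped.

```python
import ipaddress

# Per-client import whitelist, mirroring the "import where net ~ [...]" filters above.
ALLOWED_PREFIXES = {
    "10.0.0.101": {ipaddress.ip_network("192.0.2.88/32")},  # kdc1
    "10.0.0.102": {ipaddress.ip_network("192.0.2.88/32")},  # kdc2
    "10.0.0.103": {ipaddress.ip_network("192.0.2.88/32")},  # kdc3
}


def accept_update(neighbor, prefix):
    """Accept an announcement only if this neighbor may originate this exact prefix."""
    return ipaddress.ip_network(prefix) in ALLOWED_PREFIXES.get(neighbor, set())


for neighbor, prefix in [("10.0.0.101", "192.0.2.88/32"),
                         ("10.0.0.102", "192.0.2.53/32"),
                         ("10.0.0.103", "0.0.0.0/0")]:
    print(neighbor, prefix, accept_update(neighbor, prefix))
```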

After configuring the sessions on both sides they should come up and routes should be exchanged. On the KDC service node this could look like this …

    kdc1:~# birdc
    bird> show protocols
    name         proto    table    state  since       info
    kernel1      Kernel   master   up     2018-02-13
    device1      Device   master   up     2018-02-13
    rr1          BGP      master   up     2018-02-23  Established
    rr2          BGP      master   up     2018-02-23  Established
    anycast_srv  Direct   master   up     2018-02-13
    bird> show route export rr1
    192.0.2.88/32      dev anycast_srv [anycast_srv 2018-02-13] * (240)
    bird> show route export rr2
    192.0.2.88/32      dev anycast_srv [anycast_srv 2018-02-13] * (240)

and on the route reflector like this …

    rr1:~# birdc
    bird> show protocols
    name         proto    table    state  since       info
    kernel1      Kernel   master   up     2018-02-23
    device1      Device   master   up     2018-02-23
    kdc1         BGP      master   up     2018-02-23  Established
    kdc2         BGP      master   up     2018-02-23  Established
    kdc3         BGP      master   up     2018-02-23  Established
    bird> show route
    192.0.2.88/32      unreachable [kdc1 2018-02-23 from 10.0.0.101] * (100/-) [i]
                       unreachable [kdc2 2018-02-23 from 10.0.0.102] (100/-) [i]
                       unreachable [kdc3 2018-02-23 from 10.0.0.103] (100/-) [i]

The show route output indicates that we received three routes for our anycast prefix, which is what we expected, but the routes are marked as unreachable. The reason for the latter is that bird cannot resolve the BGP next hop recursively, as there is no route covering 10.0.0.* in bird’s view of the routing table. This isn’t a real problem, as the Nexus routers are able to look up the next hop correctly, but it’s confusing. It can easily be fixed by learning the default route from the Linux kernel routing table on the route reflectors: activate the learn switch in the kernel protocol and set an import filter that only allows the default route. The kernel protocol stanza in bird.conf could then look like this:

    protocol kernel {
        scan time 20;
        import where net = 0.0.0.0/0;
        learn;
        export none;
    }

After reloading the bird configuration with birdc configure, we should see something like this:

    rr1:~# birdc
    BIRD 1.6.3 ready.
    bird> show route
    0.0.0.0/0          via 172.16.0.1 on ens192 [kernel1 23:34:27] * (10)
    192.0.2.88/32      via 172.16.0.1 on ens192 [kdc1 2018-02-23 from 10.0.0.101] * (100/?) [i]
                       via 172.16.0.1 on ens192 [kdc2 2018-02-23 from 10.0.0.102] (100/?) [i]
                       via 172.16.0.1 on ens192 [kdc3 2018-02-23 from 10.0.0.103] (100/?) [i]
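What bird does with the BGP next hop here is a recursive lookup: it searches its own table for the most specific route covering 10.0.0.101 and uses that route’s gateway. This sketch models only that longest-prefix match, using hypothetical before/after tables (an empty table, then one holding just the learned default route); everything else bird considers is ignored.

```python
import ipaddress


def resolve_next_hop(next_hop, table):
    """Longest-prefix match of a BGP next hop against {prefix: gateway}.

    Returns the gateway of the most specific covering route, or None when
    no route covers the next hop (the case bird shows as unreachable).
    """
    addr = ipaddress.ip_address(next_hop)
    covering = [net for net in table if addr in net]
    if not covering:
        return None
    return table[max(covering, key=lambda net: net.prefixlen)]


# Hypothetical views of the RR's bird table before and after "learn".
before = {}
after = {ipaddress.ip_network("0.0.0.0/0"): "172.16.0.1"}

for kdc in ("10.0.0.101", "10.0.0.102", "10.0.0.103"):
    print(kdc, resolve_next_hop(kdc, before), resolve_next_hop(kdc, after))
```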

Great! Let’s export these routes from the route reflectors to the Nexus routers. On the RR side we have to set up additional sessions like the ones above but with some small differences:

- For safety reasons we only export prefixes which match a predefined list of known anycast prefixes. Using a whitelist like the one in the example below is highly recommended; if you don’t want to, at least filter out the default route, which the route reflectors now learn from the kernel, as some funny things might happen otherwise!

- Usually a route reflector only exports the best path for any given prefix from the RR’s point of view. As we want to exploit ECMP on the Nexus routers, these boxes need to know about all available paths. That’s where the BGP add-path capability comes in handy, which we activate with the add paths knob.

    define ANYCAST_PREFIXES = [ 192.0.2.0/24{32,32} ];

    protocol bgp nexus1 from rr_client {
        import none;
        export where net ~ ANYCAST_PREFIXES;
        neighbor 172.16.0.1 as AS_OWN;
        add paths tx;
    }

    protocol bgp nexus2 from rr_client {
        import none;
        export where net ~ ANYCAST_PREFIXES;
        neighbor 172.16.0.2 as AS_OWN;
        add paths tx;
    }
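The `{32,32}` suffix in ANYCAST_PREFIXES is a bird prefix pattern: a route matches if it lies within 192.0.2.0/24 and its prefix length is between 32 and 32, i.e. only host routes are exported. The hypothetical helper below reimplements that rule with Python’s `ipaddress` module to make the semantics explicit.

```python
import ipaddress


def matches_bird_pattern(prefix, base, low, high):
    """Match `prefix` against a bird prefix pattern written as base{low,high}.

    The prefix must share the first base.prefixlen bits with `base`, and its
    own length must lie within [low, high].
    """
    net = ipaddress.ip_network(prefix)
    base_net = ipaddress.ip_network(base)
    return low <= net.prefixlen <= high and net.subnet_of(base_net)


# ANYCAST_PREFIXES = [ 192.0.2.0/24{32,32} ] from the config above
for candidate in ("192.0.2.88/32", "192.0.2.0/24", "198.51.100.1/32", "0.0.0.0/0"):
    print(candidate, matches_bird_pattern(candidate, "192.0.2.0/24", 32, 32))
```

In particular, the default route learned from the kernel never matches, which is exactly the safety net described above.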

That’s it on the route reflector side.
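Why `add paths tx` matters can be shown with a toy model. Without add-path the RR only advertises its best path, so the Nexus sees a single next hop and has nothing to spread load across. `best_path` below is a deliberately crude stand-in for the BGP decision process (highest local preference, then lowest next-hop address), and all names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    prefix: str
    next_hop: str
    local_pref: int = 100


def best_path(paths):
    """Crude stand-in for BGP best-path selection.

    Prefers the highest local preference and breaks ties on the lowest
    next-hop address; the real decision process has many more steps.
    """
    return min(paths, key=lambda p: (-p.local_pref, ipaddress.ip_address(p.next_hop)))


def paths_sent_to_peer(paths, add_paths_tx):
    """Without add-path only the best path is advertised; with it, all paths are."""
    return list(paths) if add_paths_tx else [best_path(paths)]


received = [Path("192.0.2.88/32", hop) for hop in ("10.0.0.101", "10.0.0.102", "10.0.0.103")]
print(len(paths_sent_to_peer(received, add_paths_tx=False)),
      len(paths_sent_to_peer(received, add_paths_tx=True)))
```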

BGP on the Nexus 7000 routers

Thanks to the route reflectors “in the middle”, we only have to touch the Nexus boxes once to set up the sessions to the RRs. Any further service nodes will only require configuration changes on the RRs, which we will automate away anyway.
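Since adding a KDC only means adding another `protocol bgp` stanza on the RRs, the stanzas are easy to generate. Our route reflectors are managed by bcfg2; the following is a plain Python sketch, not our bcfg2 configuration, and the `NODES` inventory and function names are hypothetical. It also refuses duplicate protocol names, guarding against the copy-and-paste slip of reusing a session name.

```python
def render_rr_client(name, neighbor, prefixes):
    """Render one bird 'protocol bgp ... from rr_client' stanza.

    `prefixes` is a list of (prefix, comment) tuples; commas go before the
    '#' so the comment cannot swallow them.
    """
    lines = [f"protocol bgp {name} from rr_client {{", "\timport where net ~ ["]
    for i, (prefix, comment) in enumerate(prefixes):
        comma = "," if i < len(prefixes) - 1 else ""
        lines.append(f"\t\t{prefix}{comma} # {comment}")
    lines += ["\t];", f"\tneighbor {neighbor} as AS_OWN;", "}"]
    return "\n".join(lines)


def render_rr_clients(nodes):
    """Render all client sessions, refusing duplicate protocol names."""
    names = [name for name, _, _ in nodes]
    duplicates = sorted({n for n in names if names.count(n) > 1})
    if duplicates:
        raise ValueError(f"duplicate protocol names: {duplicates}")
    return "\n\n".join(render_rr_client(*node) for node in nodes) + "\n"


# Hypothetical inventory, e.g. exported from the configuration management.
NODES = [
    ("kdc1", "10.0.0.101", [("192.0.2.88/32", "kerberos-kdc")]),
    ("kdc2", "10.0.0.102", [("192.0.2.88/32", "kerberos-kdc")]),
    ("kdc3", "10.0.0.103", [("192.0.2.88/32", "kerberos-kdc")]),
]
print(render_rr_clients(NODES))
```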

On the Nexus boxes we have to make sure to

- enable the bgp feature

- activate the addpath capability to receive multiple routes via BGP

- set maximum-paths to install multiple paths into the FIB

- only accept routes from the desired anycast prefix(es)

This boils down to the following NX-OS config snippet:

    feature bgp

    ip prefix-list anycast seq 5 permit 192.0.2.0/24 le 32

    router bgp 65049
      log-neighbor-changes
      address-family ipv4 unicast
        maximum-paths ibgp 8
      template peer rr
        address-family ipv4 unicast
          prefix-list anycast in
          maximum-prefix 32
          soft-reconfiguration inbound
          capability additional-paths receive
      neighbor 172.16.0.11 remote-as 65049
        inherit peer rr
      neighbor 172.16.0.12 remote-as 65049
        inherit peer rr
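NX-OS prefix lists use `ge`/`le` modifiers instead of bird’s `{low,high}` syntax. The hypothetical helper below evaluates a single permit line following the usual Cisco rules: without modifiers only the exact prefix matches, and `le 32` widens the match to every length from /24 up to /32 inside 192.0.2.0/24.

```python
import ipaddress


def prefix_list_permits(prefix, entry, ge=None, le=None):
    """Evaluate one Cisco-style 'permit <entry> [ge N] [le M]' line.

    Without ge/le only the exact prefix matches. With le only, lengths from
    the entry's own length up to le match; with ge only, lengths from ge up
    to the address width match.
    """
    net = ipaddress.ip_network(prefix)
    base = ipaddress.ip_network(entry)
    if not net.subnet_of(base):
        return False
    if ge is None and le is None:
        return net.prefixlen == base.prefixlen
    low = base.prefixlen if ge is None else ge
    high = net.max_prefixlen if le is None else le
    return low <= net.prefixlen <= high


# ip prefix-list anycast seq 5 permit 192.0.2.0/24 le 32
for candidate in ("192.0.2.88/32", "192.0.2.0/24", "192.0.0.0/16", "0.0.0.0/0"):
    print(candidate, prefix_list_permits(candidate, "192.0.2.0/24", le=32))
```

Unlike the `192.0.2.0/24{32,32}` set on the route reflectors, this entry would also accept the /24 itself; the RR export filter keeps that from being announced in the first place.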

If both RRs and the Nexus box are configured correctly, two sessions should be up:

    nexus1# show bgp ipv4 unicast summary
    [...]
    Neighbor        V    AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
    172.16.0.11     4 65049   17101   14930       41    0    0 2d17h    3
    172.16.0.12     4 65049   17069   14930       41    0    0 2d17h    3

and we should receive the routes for the anycast prefix(es)

    nexus1# show bgp ipv4 unicast received-paths
    BGP routing table information for VRF default, address family IPv4 Unicast
    BGP table version is 41, local router ID is 172.16.0.1
    Status: s-suppressed, x-deleted, S-stale, d-dampened, h-history, *-valid, >-best
    Path type: i-internal, e-external, c-confed, l-local, a-aggregate, r-redist, I-injected
    Origin codes: i - IGP, e - EGP, ? - incomplete, | - multipath, & - backup

       Network            Next Hop            Metric     LocPrf     Weight Path
    *|i192.0.2.88/32      10.0.0.101                        100          0 i
    * i                   10.0.0.102                        100          0 i
    *|i                   10.0.0.103                        100          0 i
    * i                   10.0.0.103                        100          0 i
    *>i                   10.0.0.102                        100          0 i
    * i                   10.0.0.101                        100          0 i

which should also be installed into the box’s FIB:

    nexus1# show ip route 192.0.2.88
    IP Route Table for VRF "default"
    '*' denotes best ucast next-hop
    '**' denotes best mcast next-hop
    '[x/y]' denotes [preference/metric]
    '%<string>' in via output denotes VRF <string>

    192.0.2.88/32, ubest/mbest: 1/0
        *via 10.0.0.103, [200/0], 2d17h, bgp-65049, internal, tag 65049,
        *via 10.0.0.102, [200/0], 2d17h, bgp-65049, internal, tag 65049,
        *via 10.0.0.101, [200/0], 2d17h, bgp-65049, internal, tag 65049,
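The received-paths output lists six paths, one per KDC from each of the two RRs, while only three distinct next hops end up in the FIB. A simplified model of multipath selection illustrates this: collect the distinct next hops among the equally preferred paths and cap them at `maximum-paths`. It compares only local preference, whereas NX-OS checks several more attributes before treating paths as equal, and `multipath_next_hops` is a hypothetical name.

```python
def multipath_next_hops(paths, maximum_paths):
    """Pick the distinct next hops of all equally preferred paths.

    Only local preference is compared here; real multipath eligibility also
    requires other attributes (AS path, origin, MED, IGP metric, ...) to match.
    """
    best = max(p["local_pref"] for p in paths)
    hops = []
    for p in paths:
        if p["local_pref"] == best and p["next_hop"] not in hops:
            hops.append(p["next_hop"])  # the same KDC arrives once per RR
    return hops[:maximum_paths]


# The six received paths from the output above (two RRs x three KDCs).
received = [{"next_hop": hop, "local_pref": 100} for hop in
            ("10.0.0.101", "10.0.0.102", "10.0.0.103",
             "10.0.0.103", "10.0.0.102", "10.0.0.101")]
print(multipath_next_hops(received, maximum_paths=8))
```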

Et voila, we successfully set up anycast for our KDCs!

Depending on your network topology, you might need to redistribute the anycast prefix(es) into the backbone of your network to ensure reachability. We do this by redistributing static prefixes into OSPF, which we already had in place due to some static routes within the data center, plus a pull-up route for the whole anycast prefix (192.0.2.0/24 in this example).